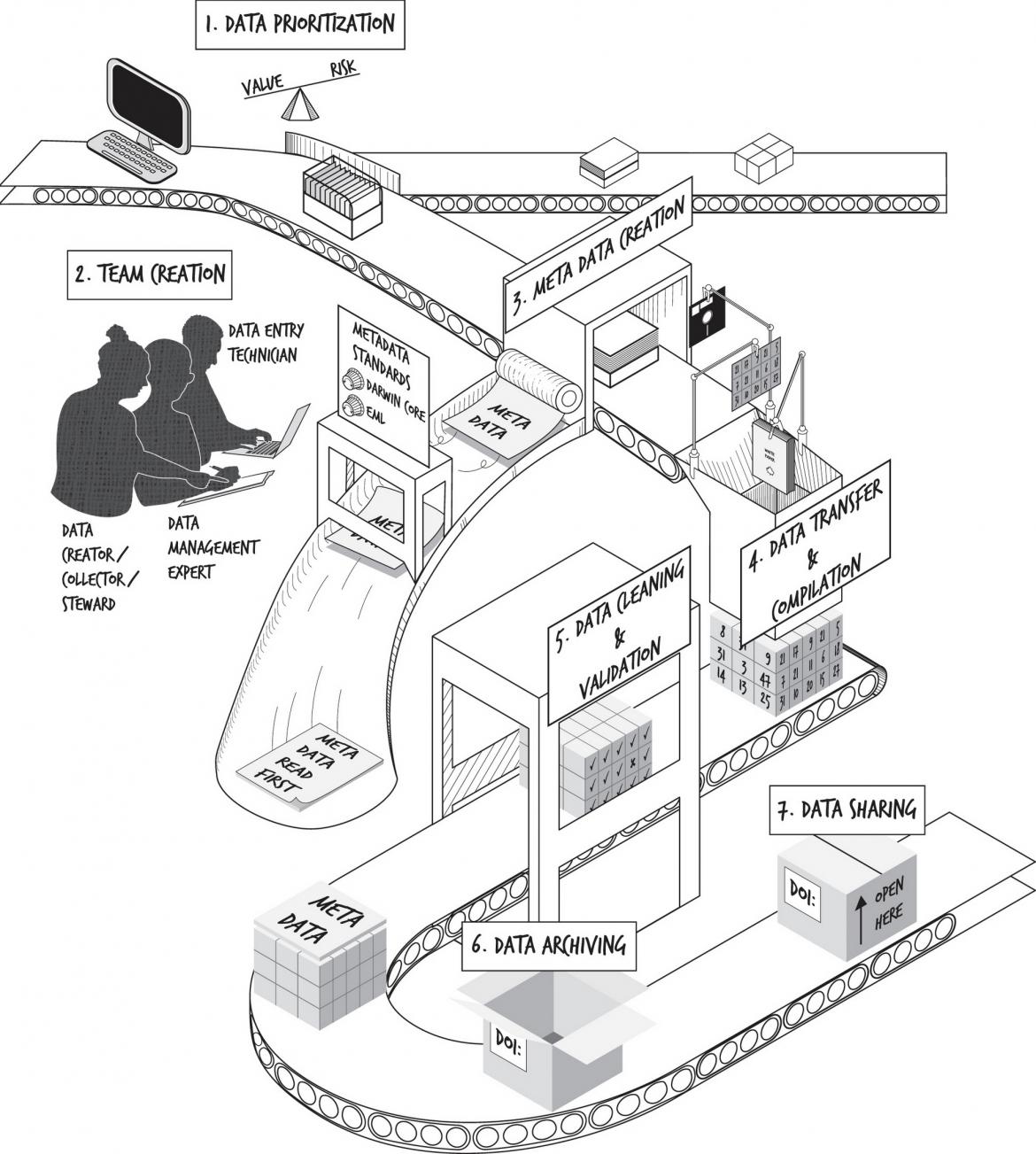

Figure 2. Steps in the data rescue assembly line. First, data must be prioritized for rescue (Step 1). After team creation (Step 2) and metadata creation (Step 3), the data must be transferred and compiled into a logical format (Step 4). After data cleaning and validation (Step 5) is complete, the finalized data and metadata should be archived in a long-term data repository (Step 6). The ultimate goal is to have the rescued data openly available for re-use (Step 7). Alt-text is available in the electronic supplementary material.

Abstract

Historical and long-term environmental datasets are essential to understanding how natural systems respond to our changing world. Although immensely valuable, these data are at risk of being lost unless actively curated and archived in data repositories. The practice of data rescue, which we define as identifying, preserving, and sharing valuable data and associated metadata at risk of loss, is an important means of ensuring the long-term viability and accessibility of such datasets. Improvements in policies and best practices around data management will hopefully limit future need for data rescue; these changes, however, do not apply retroactively. While rescuing data is not new, the term lacks a formal definition and is often conflated with other terms (e.g. data reuse), and general recommendations for the practice are lacking. Here, we outline seven key guidelines for effective rescue of historically collected and unmanaged datasets. We discuss prioritization of datasets to rescue, forming effective data rescue teams, preparing the data and associated metadata, and archiving and sharing the rescued materials. In an era of rapid environmental change, the best policy solutions will require evidence from both contemporary and historical sources. It is, therefore, imperative that we identify and preserve valuable, at-risk environmental data before they are lost to science.